In the previous post, we discussed the basic concepts of Open Banking. This article presents an overview of the Account and Transaction API and the changes introduced by v2.0.0 of the Open Banking UK Specification.

The Read/Write APIs of the Open Banking UK Specification v1.1.0 were released in November 2017 and consisted of:

This article focuses on the information domain of the Accounts API; the Payments API will be discussed in a future post.

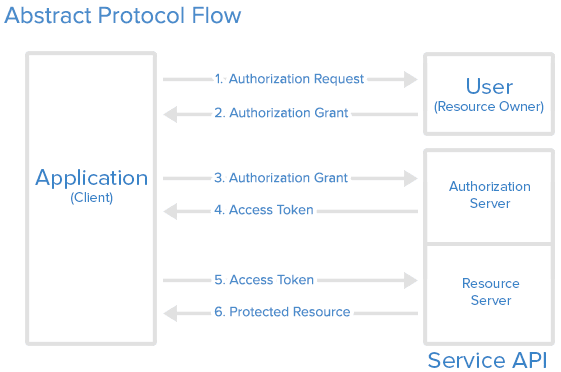

Let's go through an overview of the Accounts flow:

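- The PSU (Payment Service User) requests account information from the AISP (Account Information Service Provider)

- The AISP asks the ASPSP (Account Servicing Payment Service Provider, i.e. the bank) to initiate the authorization and consent flow

- The ASPSP authenticates the PSU, who then provides consent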

- The AISP requests account information from the ASPSP

- The ASPSP sends the requested information to the AISP
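To make the sequence of steps concrete, here is a toy, in-memory model of the flow in Python. It is only a sketch: the `ToyASPSP` class, its methods, the random tokens and the status strings are hypothetical stand-ins for the real authorization flow (which involves redirects to the bank and OAuth 2.0 tokens), and the permission names it checks are assumed from the specification.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyASPSP:
    """Hypothetical in-memory bank that stores accounts and consents."""

    accounts: dict[str, list[dict]]  # PSU id -> that PSU's accounts
    consents: dict[str, dict] = field(default_factory=dict)
    tokens: dict[str, str] = field(default_factory=dict)  # token -> consent id

    def create_consent(self, permissions: list[str]) -> str:
        # Step 2: the AISP asks the ASPSP to start the consent flow.
        consent_id = secrets.token_hex(8)
        self.consents[consent_id] = {
            "permissions": set(permissions),
            "status": "AwaitingAuthorisation",
            "psu": None,
        }
        return consent_id

    def authorise(self, consent_id: str, psu_id: str, approves: bool) -> Optional[str]:
        # Step 3: the ASPSP authenticates the PSU, who approves or rejects.
        consent = self.consents[consent_id]
        if psu_id not in self.accounts or not approves:
            consent["status"] = "Rejected"
            return None
        consent.update(status="Authorised", psu=psu_id)
        token = secrets.token_hex(16)  # stand-in for a real access token
        self.tokens[token] = consent_id
        return token

    def get_accounts(self, token: str) -> list[dict]:
        # Steps 4-5: the AISP presents the token and the ASPSP returns data,
        # but only for an authorised consent that covers account access.
        consent = self.consents.get(self.tokens.get(token, ""))
        if consent is None or consent["status"] != "Authorised":
            raise PermissionError("no authorised consent for this token")
        if not consent["permissions"] & {"ReadAccountsBasic", "ReadAccountsDetail"}:
            raise PermissionError("consent does not cover account access")
        return self.accounts[consent["psu"]]


# The AISP side, after the PSU has asked it for account information (step 1).
bank = ToyASPSP(accounts={"psu-1": [{"AccountId": "acc-001", "Currency": "GBP"}]})
consent_id = bank.create_consent(["ReadAccountsBasic"])
token = bank.authorise(consent_id, "psu-1", approves=True)
print(bank.get_accounts(token))  # [{'AccountId': 'acc-001', 'Currency': 'GBP'}]
```

The key point the model captures is that the AISP never sees the PSU's bank credentials: it only holds a token that the ASPSP ties back to a consent and its permissions.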

Version 1.1.0 of the Account and Transaction API allowed banks and TPPs (Third Party Providers) to exchange data on the following resources:

- Account details - The resource that represents the account to which credit and debit entries are made, e.g. account ID, account type, and currency

- Balances - Representation of the net increases and decreases in an account (AccountId) at a specific point in time. The data model includes account ID, credit/debit indicator, and available balance value

- Beneficiaries - Trusted beneficiaries information including beneficiary ID and creditor account details

- Direct debits - Direct debit information including identification code, status, and previous payment information

- Products - Information pertaining to products that are applicable to accounts, e.g. name and type

- Standing Orders - Standing orders information including frequency and first/next/final payment information

- Transactions - Posting to an account that results in an increase or decrease to a balance. The data model includes transaction history details including the amount, status, balance, and date and time
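Balances and transactions report an amount together with a credit/debit indicator rather than as a signed number. The sketch below shows one way a TPP might turn that into a signed figure. It assumes a simplified balance payload with `AccountId`, `Amount.Amount`, `Amount.Currency` and `CreditDebitIndicator` keys, with the amount sent as an unsigned string; the `Balance` class and `parse_balance` helper are hypothetical, and the exact schema should be checked against the specification.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Balance:
    """Hypothetical, simplified balance record."""

    account_id: str
    amount: Decimal  # assumed unsigned; the indicator carries the sign
    currency: str
    credit_debit_indicator: str  # "Credit" or "Debit"

    def signed_amount(self) -> Decimal:
        # Treat a "Debit" balance (e.g. an overdrawn account) as negative.
        if self.credit_debit_indicator == "Credit":
            return self.amount
        if self.credit_debit_indicator == "Debit":
            return -self.amount
        raise ValueError(f"unexpected indicator: {self.credit_debit_indicator!r}")


def parse_balance(raw: dict) -> Balance:
    # Amounts are assumed to arrive as strings such as "1230.00";
    # Decimal keeps them exact instead of rounding through float.
    return Balance(
        account_id=raw["AccountId"],
        amount=Decimal(raw["Amount"]["Amount"]),
        currency=raw["Amount"]["Currency"],
        credit_debit_indicator=raw["CreditDebitIndicator"],
    )


sample = {  # made-up sample payload in the assumed shape
    "AccountId": "acc-001",
    "Amount": {"Amount": "1230.00", "Currency": "GBP"},
    "CreditDebitIndicator": "Debit",
}
print(parse_balance(sample).signed_amount())  # -1230.00
```

Using `Decimal` rather than `float` is the usual choice for money, since values like `0.10` cannot be represented exactly in binary floating point.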

Each resource is exposed via API endpoints whose responses are defined in the specification. Each resource can be retrieved in bulk (across all accounts the PSU has authorized) or for a single account, and every retrieval is guarded by a set of permission codes, also defined in the specification.
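The bulk versus per-account split and the permission check can be sketched as a lookup table. The paths and permission codes below reflect our reading of the v1.1-era specification and should be treated as assumptions to verify against it; `RESOURCES`, `endpoint` and `is_permitted` are hypothetical helpers, not part of any official SDK.

```python
from __future__ import annotations

from typing import Optional

# Assumed paths and permission codes; verify against the specification.
# Each entry: (bulk path, per-account path, permission groups).
# Every group must overlap the granted permissions for access to be allowed.
RESOURCES = {
    "accounts": ("/accounts", "/accounts/{AccountId}",
                 [{"ReadAccountsBasic", "ReadAccountsDetail"}]),
    "balances": ("/balances", "/accounts/{AccountId}/balances",
                 [{"ReadBalances"}]),
    "beneficiaries": ("/beneficiaries", "/accounts/{AccountId}/beneficiaries",
                      [{"ReadBeneficiariesBasic", "ReadBeneficiariesDetail"}]),
    "direct-debits": ("/direct-debits", "/accounts/{AccountId}/direct-debits",
                      [{"ReadDirectDebits"}]),
    "products": ("/products", "/accounts/{AccountId}/product",
                 [{"ReadProducts"}]),
    "standing-orders": ("/standing-orders", "/accounts/{AccountId}/standing-orders",
                        [{"ReadStandingOrdersBasic", "ReadStandingOrdersDetail"}]),
    "transactions": ("/transactions", "/accounts/{AccountId}/transactions",
                     [{"ReadTransactionsBasic", "ReadTransactionsDetail"},
                      {"ReadTransactionsCredits", "ReadTransactionsDebits"}]),
}


def endpoint(resource: str, account_id: Optional[str] = None) -> str:
    """Return the bulk path, or the per-account path when account_id is given."""
    bulk, per_account, _ = RESOURCES[resource]
    return bulk if account_id is None else per_account.format(AccountId=account_id)


def is_permitted(resource: str, granted: set[str]) -> bool:
    """True if every permission group for the resource overlaps the grant."""
    _, _, groups = RESOURCES[resource]
    return all(group & granted for group in groups)


print(endpoint("balances", "acc-001"))  # /accounts/acc-001/balances
print(is_permitted("transactions", {"ReadTransactionsDetail"}))  # False
```

Modelling each requirement as a group of alternatives lets the transactions rule (Basic or Detail, combined with Credits and/or Debits) sit alongside the simpler single-code resources.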

Version 2.0.0 of the Read/Write APIs was released in February 2018. Its major change was the addition of new resources to the Account and Transaction API, which expanded the information domain that the API covers.

The newly added resources are:

- Account Requests - Authorizations to access the account

- Offers - Details related to offers that are available for the accounts such as offer type, limit, and description

- Party - Information about the logged-in user or account owner such as name, email, and address

- Scheduled Payments - Single one-off payments scheduled for a future date, with details including the instructed amount, date and time, and creditor account details

- Statements - Associated information for each statement on the account including the start date, end date, and statement amounts
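On the consent side, new resources mean new permission codes that an AISP can request. The sketch below builds an account-request body. The `Data`/`Risk` envelope, the `Permissions` and `ExpirationDateTime` keys, and the permission names are assumptions based on the specification and should be checked against it; `account_request_body` is a hypothetical helper.

```python
from __future__ import annotations

import json
from typing import Optional

# Assumed permission codes: the v1.1 set plus codes tied to the resources
# added in v2.0. Verify the exact list against the specification.
V1_PERMISSIONS = {
    "ReadAccountsBasic", "ReadAccountsDetail", "ReadBalances",
    "ReadBeneficiariesBasic", "ReadBeneficiariesDetail", "ReadDirectDebits",
    "ReadProducts", "ReadStandingOrdersBasic", "ReadStandingOrdersDetail",
    "ReadTransactionsBasic", "ReadTransactionsDetail",
    "ReadTransactionsCredits", "ReadTransactionsDebits",
}
V2_ADDED_PERMISSIONS = {
    "ReadOffers", "ReadParty", "ReadPartyPSU",
    "ReadScheduledPaymentsBasic", "ReadScheduledPaymentsDetail",
    "ReadStatementsBasic", "ReadStatementsDetail",
}
V2_PERMISSIONS = V1_PERMISSIONS | V2_ADDED_PERMISSIONS


def account_request_body(permissions: list[str], expires: Optional[str] = None) -> dict:
    """Build an account-request body in the assumed Data/Risk shape."""
    unknown = set(permissions) - V2_PERMISSIONS
    if unknown:
        raise ValueError(f"unknown permission codes: {sorted(unknown)}")
    data: dict = {"Permissions": permissions}
    if expires is not None:
        data["ExpirationDateTime"] = expires  # ISO 8601 date-time string
    return {"Data": data, "Risk": {}}


print(json.dumps(account_request_body(["ReadAccountsDetail", "ReadStatementsBasic"])))
# {"Data": {"Permissions": ["ReadAccountsDetail", "ReadStatementsBasic"]}, "Risk": {}}
```

Rejecting unknown codes early means a v1.1-only client would catch a v2.0 permission such as `ReadStatementsBasic` if it were validated against `V1_PERMISSIONS` alone.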

Unlike the Account and Transaction API, the Payment Initiation API was not affected by the v2.0.0 release. The Payment Initiation API defines the endpoints that facilitate payments; a payment request carries information such as the instruction type, payment amount, creditor details, debtor details, and remittance information. The specification describes the API endpoints, headers, and request and response data models in detail, along with the required security features.
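For comparison, a payment request can be modelled in a similar way. This is a hypothetical sketch: the `PaymentRequest` and `Party` classes, the `Initiation` JSON layout and the example scheme name are assumptions loosely modelled on the specification. It covers only an instruction identifier, amount, creditor, optional debtor and a remittance reference, so it does not represent every field the specification defines.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass
class Party:
    """Hypothetical account reference for a creditor or debtor."""

    scheme_name: str  # e.g. "SortCodeAccountNumber" (assumed value)
    identification: str
    name: str


def _account(party: Party) -> dict:
    # Serialise a Party into the assumed account object shape.
    return {
        "SchemeName": party.scheme_name,
        "Identification": party.identification,
        "Name": party.name,
    }


@dataclass
class PaymentRequest:
    """Hypothetical model of the fields a payment request carries."""

    instruction_id: str
    amount: Decimal
    currency: str
    creditor: Party
    debtor: Optional[Party] = None
    remittance_reference: Optional[str] = None

    def to_payload(self) -> dict:
        # Basic checks before building the assumed JSON body.
        if self.amount <= 0:
            raise ValueError("amount must be positive")
        if not re.fullmatch(r"[A-Z]{3}", self.currency):
            raise ValueError("currency must be a three-letter ISO 4217 code")
        initiation: dict = {
            "InstructionIdentification": self.instruction_id,
            "InstructedAmount": {"Amount": f"{self.amount:.2f}", "Currency": self.currency},
            "CreditorAccount": _account(self.creditor),
        }
        if self.debtor is not None:
            initiation["DebtorAccount"] = _account(self.debtor)
        if self.remittance_reference is not None:
            initiation["RemittanceInformation"] = {"Reference": self.remittance_reference}
        return {"Data": {"Initiation": initiation}, "Risk": {}}


creditor = Party("SortCodeAccountNumber", "12345612345678", "Sample Payee")
request = PaymentRequest("instr-001", Decimal("10"), "GBP", creditor, remittance_reference="INV-42")
print(request.to_payload()["Data"]["Initiation"]["InstructedAmount"])
# {'Amount': '10.00', 'Currency': 'GBP'}
```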

Cheers!